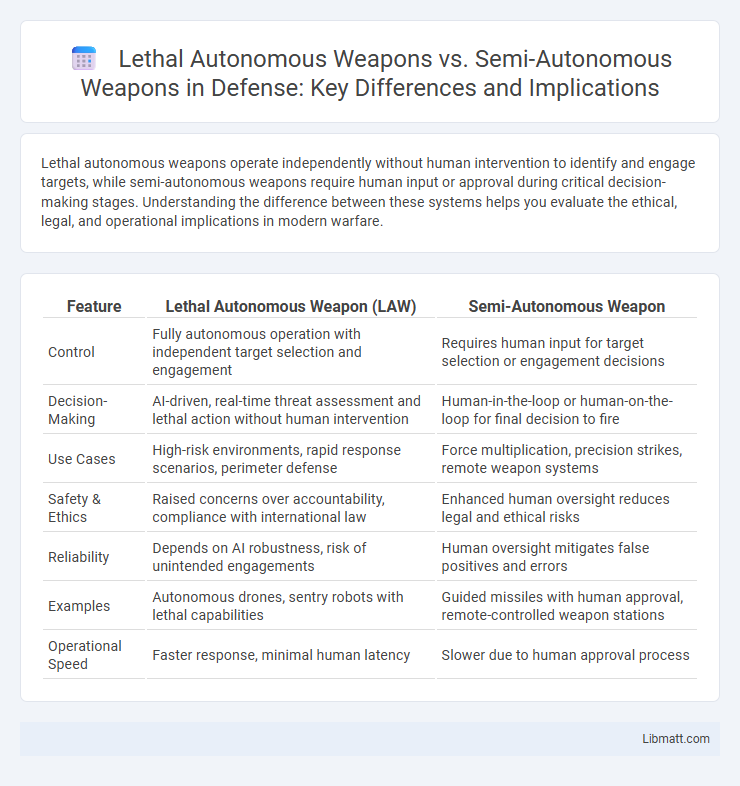

Lethal autonomous weapons operate independently without human intervention to identify and engage targets, while semi-autonomous weapons require human input or approval during critical decision-making stages. Understanding the difference between these systems helps you evaluate the ethical, legal, and operational implications in modern warfare.

Table of Comparison

| Feature | Lethal Autonomous Weapon (LAW) | Semi-Autonomous Weapon |
|---|---|---|
| Control | Fully autonomous operation with independent target selection and engagement | Requires human input for target selection or engagement decisions |
| Decision-Making | AI-driven, real-time threat assessment and lethal action without human intervention | Human-in-the-loop or human-on-the-loop for the final decision to fire |
| Use Cases | High-risk environments, rapid-response scenarios, perimeter defense | Force multiplication, precision strikes, remote weapon systems |
| Safety & Ethics | Raises concerns about accountability and compliance with international law | Greater human oversight reduces legal and ethical risks |
| Reliability | Depends on AI robustness; risk of unintended engagements | Human oversight helps catch false positives and errors |
| Examples | Autonomous drones, sentry robots with lethal capabilities | Guided missiles with human approval, remote-controlled weapon stations |
| Operational Speed | Faster response with minimal human latency | Slower due to the human approval process |

Introduction to Autonomous and Semi-Autonomous Weapons

Lethal autonomous weapons operate independently, identifying and engaging targets without human intervention, leveraging advanced AI algorithms and sensor technologies to make real-time decisions. Semi-autonomous weapons require human oversight for critical functions, such as target selection and engagement approval, combining automation with human judgment to enhance precision and reduce errors. The distinction between these systems lies in their operational autonomy, with lethal autonomous weapons maximizing automation and semi-autonomous systems maintaining human control in the decision-making loop.

Key Definitions: Lethal Autonomous vs Semi-Autonomous Weapons

Lethal autonomous weapons operate independently without human intervention once activated, using artificial intelligence to identify, select, and engage targets. Semi-autonomous weapons require human input or supervision for critical functions such as target selection or weapon deployment, ensuring a degree of human control. Understanding these distinctions is crucial for assessing the ethical, legal, and strategic implications of using or regulating these technologies.

Core Technologies Behind Autonomous Weapon Systems

Lethal autonomous weapons rely on advanced artificial intelligence, machine learning algorithms, and sensor fusion technologies to independently identify, track, and engage targets without human intervention. Semi-autonomous weapons combine human decision-making with automated targeting systems, using technologies such as remote control interfaces, assisted targeting software, and real-time data processing. Understanding these core technologies is crucial for assessing how your military capabilities can integrate automation while maintaining ethical oversight and operational control.

Levels of Human Control and Decision-Making

Lethal autonomous weapons operate with minimal to no human intervention, relying on advanced artificial intelligence to identify, select, and engage targets independently. Semi-autonomous weapons maintain human oversight, requiring operator input for critical decisions such as target selection and engagement authorization. The primary distinction lies in the extent of human control, where lethal autonomous weapons reduce direct human decision-making, potentially raising ethical and accountability concerns.

Legal and Ethical Challenges

Lethal autonomous weapons (LAWs) face significant legal and ethical challenges because they can select and engage targets without human intervention, raising concerns about accountability and compliance with international humanitarian law. Semi-autonomous weapons, which require human control for critical functions, avoid some of these issues but still provoke debate about human oversight and responsibility for decisions. Both weapon types strain existing legal frameworks, prompting calls for updated regulations that address the potential for unintended harm and the moral implications of delegating life-and-death decisions to machines.

Operational Effectiveness and Battlefield Impact

Lethal autonomous weapons operate independently with real-time decision-making capabilities, which can improve operational effectiveness by reducing human error and enabling rapid target engagement. Semi-autonomous weapons require human input for critical functions, balancing human judgment with automated precision at the cost of potentially slower response times. Your strategic advantage on the battlefield depends on whether swift autonomous action or controlled human oversight better aligns with mission objectives and ethical considerations.

International Laws and Treaties Governing Use

Existing international law, chiefly International Humanitarian Law (IHL), applies to both lethal autonomous weapons (LAWs) and semi-autonomous weapons, requiring compliance with the principles of distinction and proportionality and emphasizing accountability for their use. Within the United Nations Convention on Certain Conventional Weapons (CCW), states have held discussions and considered frameworks for regulating autonomous weapons, with many participants pressing for meaningful human control over weapon deployment. Semi-autonomous weapons currently face fewer legal uncertainties because humans remain directly involved in critical functions, whereas fully autonomous lethal weapons raise complex questions about attribution of responsibility and adherence to existing arms control agreements.

Risks of Escalation and Unintended Consequences

Lethal autonomous weapons can heighten conflict risks because they select and engage targets without human oversight, increasing the chance of accidental attacks and rapid escalation. Semi-autonomous weapons retain human control over critical decisions, reducing the likelihood of unintended engagements and providing a safeguard in unpredictable battlefield scenarios. You face higher uncertainty with fully autonomous systems, because their behavior in complex conflict environments can be difficult to predict and may trigger chain reactions that are hard to control.

Perspectives: Military vs Humanitarian Concerns

Lethal autonomous weapons operate independently without human intervention, raising significant humanitarian concerns about accountability, ethical use, and potential violations of international law. Military perspectives emphasize increased operational efficiency, faster decision-making, and reduced risk to soldiers, while critics stress the dangers of uncontrollable escalation and collateral damage. Understanding these contrasting views is crucial for evaluating the policy and ethical frameworks that govern weapon deployment.

The Future Landscape of Autonomous Weaponry

The future landscape of autonomous weaponry is marked by growing development of lethal autonomous weapons capable of independent target selection and engagement, in sharp contrast to semi-autonomous weapons that require human authorization for critical actions. Your strategic planning must account for evolving ethical debates, international regulations, and technological advances that increase autonomy and decision-making speed. Greater reliance on AI-driven systems is likely to redefine battlefield dynamics, emphasizing precision and reducing human intervention in combat operations.

Lethal autonomous weapon vs Semi-autonomous weapon Infographic

libmatt.com