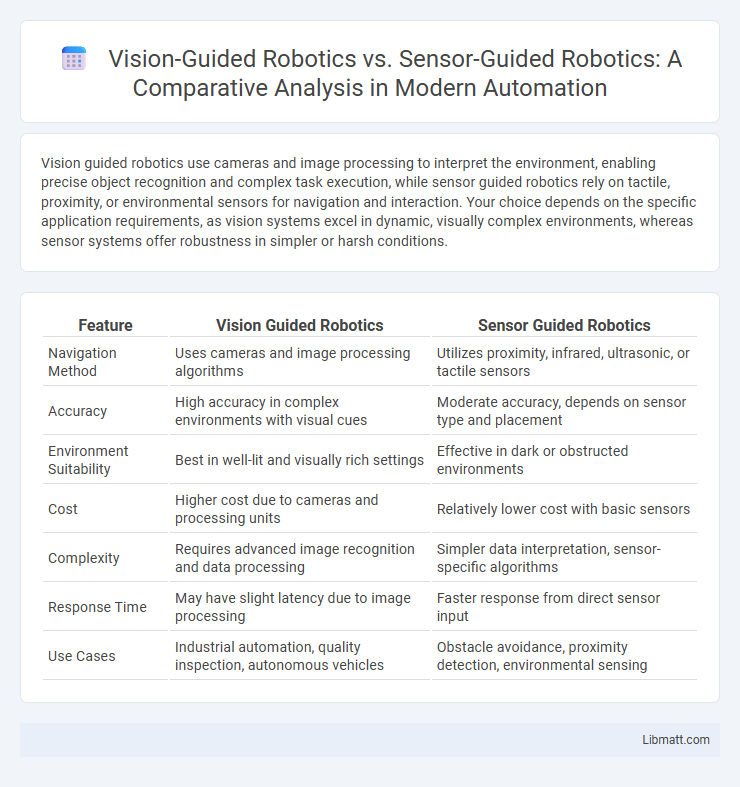

Vision-guided robotics uses cameras and image processing to interpret the environment, enabling precise object recognition and complex task execution, while sensor-guided robotics relies on tactile, proximity, or other environmental sensors for navigation and interaction. Your choice depends on the application's requirements: vision systems excel in dynamic, visually complex environments, whereas sensor systems are more robust in simpler or harsh conditions.

Table of Comparison

| Feature | Vision Guided Robotics | Sensor Guided Robotics |
|---|---|---|
| Primary Sensing Method | Cameras and image processing algorithms | Proximity, infrared, ultrasonic, or tactile sensors |
| Accuracy | High in complex environments with visual cues | Moderate; depends on sensor type and placement |
| Environment Suitability | Best in well-lit, visually rich settings | Effective in dark or visually obstructed environments |
| Cost | Higher, due to cameras and processing units | Relatively lower with basic sensors |
| Complexity | Requires advanced image recognition and data processing | Simpler data interpretation with sensor-specific algorithms |
| Response Time | May add latency from image processing | Typically faster, from direct sensor input |
| Use Cases | Industrial automation, quality inspection, autonomous vehicles | Obstacle avoidance, proximity detection, environmental sensing |

Introduction to Vision Guided Robotics

Vision-guided robotics uses cameras and image processing algorithms to let robots interpret their surroundings with high precision, supporting tasks such as object recognition and navigation. These systems provide real-time feedback, so a robot can adjust its movements based on what it sees; where parts vary in position or orientation, this typically yields better accuracy than sensor-only guidance. Your automation process gains flexibility and adaptability from vision guidance in complex environments where sensor-guided robotics might fall short.
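
The real-time feedback loop described above is often implemented as visual servoing. The sketch below is a minimal, hypothetical proportional controller, assuming a tool-mounted camera whose image axes are aligned with the robot's planar axes; the gain, speed limit, image centre, and the `px_per_m` camera model are illustrative values, not parameters of any particular robot.

```python
def clamp(value, limit):
    """Limit value to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, value))


def servo_step(target_px, center_px, gain=0.001, max_speed=0.05):
    """One proportional visual-servoing step.

    Converts the pixel error between the detected target and the image
    centre into a planar velocity command (m/s), saturated at max_speed.
    The gain and limit are hypothetical tuning values.
    """
    error_x = target_px[0] - center_px[0]
    error_y = target_px[1] - center_px[1]
    return clamp(gain * error_x, max_speed), clamp(gain * error_y, max_speed)


def simulate(target_px, center_px=(320.0, 240.0), steps=50, dt=0.1, px_per_m=5000.0):
    """Toy closed loop under an assumed camera model: moving the tool by
    v * dt metres shifts the target v * dt * px_per_m pixels toward the centre."""
    x, y = target_px
    for _ in range(steps):
        vx, vy = servo_step((x, y), center_px)
        x -= vx * dt * px_per_m
        y -= vy * dt * px_per_m
    return x, y


final_x, final_y = simulate((520.0, 100.0))
print(f"residual error: {final_x - 320.0:.4f} px, {final_y - 240.0:.4f} px")
```

Because the command is proportional to the remaining error, the robot slows as the target approaches the image centre, while the saturation keeps large initial errors from producing excessive speeds.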

Overview of Sensor Guided Robotics

Sensor-guided robotics relies on sensors such as proximity, tactile, and infrared devices to detect and interact with the environment, enabling real-time adjustments during operation. These systems excel at tasks that need direct environmental feedback, such as collision avoidance and contact-based object manipulation. Compared with vision-guided robotics, sensor-guided systems can perform more robustly in low-visibility or dusty environments where visual data may be unreliable.
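
As a rough illustration of collision avoidance driven by proximity sensors, the sketch below scales speed from a single distance reading and picks a reactive action from three readings. The thresholds (`stop_m`, `slow_m`) and the left/front/right sensor layout are hypothetical; real values depend on the robot, its payload, and the applicable safety requirements.

```python
def speed_scale(distance_m, stop_m=0.3, slow_m=1.0):
    """Map a proximity reading (m) to a speed factor in [0, 1].

    Below stop_m the robot halts; beyond slow_m it runs at full speed;
    in between the factor rises linearly. Thresholds are hypothetical.
    """
    if distance_m <= stop_m:
        return 0.0
    if distance_m >= slow_m:
        return 1.0
    return (distance_m - stop_m) / (slow_m - stop_m)


def choose_action(left_m, front_m, right_m, stop_m=0.3):
    """Reactive rule: drive forward if the path is clear, otherwise turn
    toward the side with more free space."""
    if front_m > stop_m:
        return "forward"
    return "turn_left" if left_m > right_m else "turn_right"


print(speed_scale(0.65), choose_action(0.4, 0.2, 1.2))
```

Rules like these need no scene model at all, which is why sensor-guided obstacle avoidance is cheap to compute and fast to react.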

Key Technologies in Vision Guided Systems

Vision-guided robotics combines cameras, image processing algorithms, and machine learning to interpret visual data, enabling precise object recognition, positioning, and manipulation. Sensor-guided robotics relies mainly on proximity sensors, ultrasonic sensors, and tactile feedback for environmental awareness, focusing on direct physical interaction rather than detailed visual analysis. Your choice between the two depends on task complexity; vision systems excel in dynamic, unstructured environments that require high accuracy.
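
To make the image-processing step concrete, here is a minimal sketch of one classic way to locate an object: threshold a grayscale image into a binary mask, then take the centroid of the foreground pixels. It uses plain Python lists, a tiny made-up image, and a hypothetical threshold of 128 for clarity; production systems typically use an optimized image-processing library, and the machine-learning recognition mentioned above is not shown.

```python
# Hypothetical 5x5 grayscale image: a bright 2x2 object on a dark background.
IMAGE = [
    [10, 10, 10, 10, 10],
    [10, 10, 200, 210, 10],
    [10, 10, 220, 205, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]


def threshold(image, level):
    """Binary mask: True where the pixel is brighter than level."""
    return [[px > level for px in row] for row in image]


def centroid(mask):
    """Return (row, col, area) of the True pixels, or None if the mask is empty."""
    area = sum_r = sum_c = 0
    for r, row in enumerate(mask):
        for c, on in enumerate(row):
            if on:
                area += 1
                sum_r += r
                sum_c += c
    if area == 0:
        return None
    return sum_r / area, sum_c / area, area


print(centroid(threshold(IMAGE, 128)))
```

The centroid gives the object's position in pixel coordinates; converting it to robot coordinates requires a camera-to-robot calibration, which this sketch does not cover.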

Core Sensors Used in Sensor Guided Robotics

Sensor-guided robotics relies primarily on ultrasonic, infrared, tactile, and proximity sensors to navigate and interact with its environment. These sensors provide real-time data on object distance, surface contact and texture, and obstacle presence, enabling tasks like collision avoidance and precise manipulation. Vision-guided robotics instead uses cameras and image processing algorithms, which give a richer understanding of the environment but depend less on direct physical feedback from these fundamental sensors.
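
Ultrasonic sensors measure distance by timing an echo: the pulse travels to the object and back, so the one-way distance is half the round trip, d = v * t / 2. The sketch below assumes dry air and uses the common linear approximation v = 331.3 + 0.606 * T m/s for the speed of sound at T degrees Celsius; the function names are illustrative.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance(echo_s, temp_c=20.0):
    """Convert a round-trip echo time (s) to a one-way distance (m).

    The pulse travels out and back, so the distance is half the path length.
    """
    return speed_of_sound(temp_c) * echo_s / 2.0


# A 10 ms echo at two temperatures: the same timing maps to different distances.
for temp in (0.0, 30.0):
    print(f"{temp:>4.0f} C -> {echo_to_distance(0.010, temp):.3f} m")
```

The temperature term is one reason ultrasonic readings shift with environmental conditions unless they are compensated.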

Accuracy and Precision: A Comparative Analysis

Vision-guided robotics uses cameras and image processing algorithms to continuously interpret visual data, enabling real-time adjustments and high accuracy in dynamic environments; the achievable precision depends on camera resolution, field of view, and calibration. Sensor-guided robotics relies on proximity, force, or ultrasonic sensors that provide limited spatial information about the scene, which often results in lower precision when locating objects in complex or variable conditions. Your choice depends on whether the application demands fine positional control and adaptability or simpler, less computationally intensive sensing.
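
One way to see what bounds a camera's positional accuracy is to compute its spatial resolution: the field of view divided by the pixel count gives the width of scene that one pixel covers. The sketch below assumes a hypothetical 640 mm field of view imaged by a 1280-pixel-wide sensor, and an assumed rule of thumb of three pixels across the smallest feature to be resolved; lens distortion and sub-pixel techniques are ignored.

```python
import math


def mm_per_pixel(fov_mm, pixels):
    """Scene width covered by one pixel along one image axis."""
    return fov_mm / pixels


def pixels_required(fov_mm, feature_mm, pixels_per_feature=3):
    """Sensor pixels needed so the smallest feature spans pixels_per_feature
    pixels (an assumed rule of thumb, not a standard)."""
    return math.ceil(fov_mm / feature_mm * pixels_per_feature)


print(mm_per_pixel(640.0, 1280))    # prints 0.5
print(pixels_required(640.0, 0.5))  # prints 3840
```

Under these assumptions, reliably resolving 0.5 mm features across the same field of view would call for a much wider sensor or a narrower view.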

Flexibility and Adaptability in Robotics

Vision-guided robotics offers greater flexibility and adaptability because cameras and image processing algorithms let it interpret complex environments and adjust tasks in real time. Sensor-guided robotics relies mainly on fixed sensors such as proximity or contact sensors, which limits its ability to adapt to unpredictable changes or varied objects. Your operations benefit from vision-guided systems when they need dynamic responses to changing conditions and intricate task requirements.

Cost Considerations: Vision vs Sensor Solutions

Vision-guided robotics typically involves higher upfront costs for camera systems and image processing software, but offers greater flexibility and accuracy in complex tasks. Sensor-guided robotics generally requires a lower initial investment in simpler sensors such as proximity or ultrasonic devices; it suits straightforward applications but may lack precision in dynamic environments. Over time, vision systems can reduce operational costs by enabling adaptive automation and minimizing errors, whereas sensor-based solutions may incur additional expenses for frequent recalibration and for expanding their limited functionality.
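
The upfront-versus-operating trade-off can be framed as a break-even calculation. The sketch below finds the first month in which the vision system's cumulative cost is no higher than the sensor system's; every figure in the example is a made-up placeholder, not market pricing, and the model ignores financing, depreciation, and downtime.

```python
import math


def break_even_month(vision_upfront, vision_monthly, sensor_upfront, sensor_monthly):
    """First month at which cumulative vision cost <= cumulative sensor cost.

    Returns 0 if vision is no more expensive up front, or None if its
    monthly cost never falls below the sensor system's.
    """
    extra_upfront = vision_upfront - sensor_upfront
    monthly_saving = sensor_monthly - vision_monthly
    if extra_upfront <= 0:
        return 0
    if monthly_saving <= 0:
        return None
    return math.ceil(extra_upfront / monthly_saving)


# Hypothetical figures: vision costs more to install but less to run
# (fewer errors, less recalibration).
print(break_even_month(vision_upfront=50_000, vision_monthly=500,
                       sensor_upfront=20_000, sensor_monthly=2_000))  # prints 20
```

If the vision system's running costs are not actually lower, the function returns None, which makes the "lower long-term cost" claim easy to test against real quotes.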

Typical Applications and Use Cases

Vision-guided robotics excels in applications requiring precise object recognition, complex assembly, and quality inspection, for example in automotive manufacturing and electronics production. Sensor-guided robotics is typically deployed for simpler, repetitive tasks such as material handling, obstacle avoidance, and basic pick-and-place operations in the warehousing and packaging industries. Vision systems let robots adapt to dynamic environments, while sensor-based guidance stays robust where visual data is limited.

Challenges and Limitations of Both Approaches

Vision-guided robotics faces challenges such as sensitivity to lighting conditions, limited depth perception with single 2D cameras, and high computational requirements for image processing, which can affect real-time performance. Sensor-guided robotics is limited by sensor drift, restricted range or field of view, and susceptibility to environmental interference such as dust or electromagnetic noise. Both approaches involve integration complexity and require calibration to maintain accuracy in dynamic or unstructured environments.
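
Two common mitigations for the sensor problems listed above are re-zeroing against a known reference to cancel slow drift and median filtering to suppress isolated spikes from interference. The sketch below shows both; the reference distance, the readings, and the window size are illustrative assumptions, and a constant-offset model will not capture drift that changes scale or varies with temperature.

```python
from statistics import median


def estimate_offset(readings, reference_value):
    """Drift offset: mean reading at a known reference minus the true value."""
    return sum(readings) / len(readings) - reference_value


def median_filter(samples, window=3):
    """Sliding-window median; edge windows are truncated to the available samples."""
    half = window // 2
    filtered = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        filtered.append(median(samples[lo:hi]))
    return filtered


# Hypothetical data: a target placed at exactly 0.500 m reads high.
offset = estimate_offset([0.521, 0.519, 0.520], reference_value=0.500)
raw = [0.80, 0.81, 2.50, 0.80, 0.79]  # 2.50 is a spurious spike
cleaned = [round(x - offset, 3) for x in median_filter(raw)]
print(round(offset, 3), cleaned)
```

A median is used rather than a mean because a single large spike cannot pull the median of a small window far from the typical readings.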

Future Trends in Robot Guidance Systems

Vision-guided robotics uses cameras and AI algorithms to improve real-time object recognition and spatial awareness, driving a trend toward more autonomous and adaptable robotic systems. Sensor-guided robotics gathers environmental data from tactile, ultrasonic, or infrared sensors, with ongoing development focused on better sensor fusion and precision in complex environments. Your choice between these technologies depends on the application, and hybrid systems that combine vision and sensor-based guidance are becoming increasingly common in modern robotics.
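
Sensor fusion in hybrid systems can be as simple as inverse-variance weighting, the static special case of a Kalman filter update: each independent estimate is weighted by the inverse of its error variance, so the more reliable sensor dominates. The sketch below assumes unbiased, independent errors; the camera and ultrasonic readings and their variances are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent (value, variance) pairs.

    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


camera = (1.00, 0.02 ** 2)      # hypothetical: 2 cm standard deviation
ultrasonic = (1.04, 0.01 ** 2)  # hypothetical: 1 cm standard deviation
distance, variance = fuse([camera, ultrasonic])
print(f"{distance:.3f} m, std {variance ** 0.5 * 100:.2f} cm")
```

The fused distance lands closer to the ultrasonic reading because its assumed variance is four times smaller, and the fused uncertainty is lower than either sensor's alone.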

Vision guided robotics vs Sensor guided robotics Infographic

libmatt.com