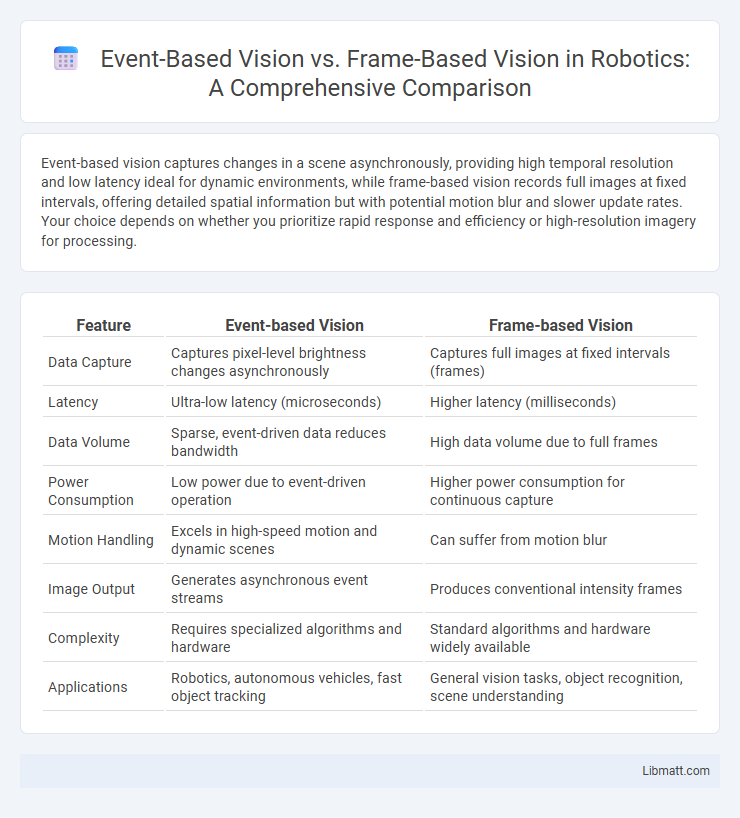

Event-based vision captures changes in a scene asynchronously, providing the high temporal resolution and low latency that dynamic environments demand. Frame-based vision records full images at fixed intervals, offering detailed spatial information at the cost of possible motion blur and slower update rates. The right choice depends on whether you prioritize rapid response and efficiency or dense, high-resolution imagery.

Comparison Table

| Feature | Event-based Vision | Frame-based Vision |
|---|---|---|
| Data Capture | Captures pixel-level brightness changes asynchronously | Captures full images at fixed intervals (frames) |
| Latency | Ultra-low latency (microseconds) | Higher latency (milliseconds) |
| Data Volume | Sparse, event-driven data reduces bandwidth | High data volume due to full frames |
| Power Consumption | Low power due to event-driven operation | Higher power consumption for continuous capture |
| Motion Handling | Excels in high-speed motion and dynamic scenes | Can suffer from motion blur |
| Output Format | Asynchronous event stream | Conventional intensity frames |
| Complexity | Requires specialized algorithms and hardware | Standard algorithms and hardware widely available |
| Applications | Robotics, autonomous vehicles, fast object tracking | General vision tasks, object recognition, scene understanding |

Introduction to Event-Based and Frame-Based Vision

Event-based vision detects pixel-level brightness changes asynchronously, which allows very fast response times and avoids re-sending pixels that have not changed. Frame-based vision records the entire scene at fixed intervals, producing the sequence of still images used by conventional cameras and video processing. Choosing between them comes down to your application's requirements for temporal resolution, power efficiency, and data volume.

Fundamental Concepts of Visual Sensing

The two approaches sample light in fundamentally different ways. In an event-based sensor, each pixel independently reports a brightness change as soon as it exceeds a threshold, giving high temporal resolution and low latency. A frame-based sensor reads out every pixel at fixed intervals, producing discrete frames that can blur fast motion and that repeat the same data while the scene is static. For applications that need fast responses in dynamic environments, event-based sensors offer efficient, precise sensing through a continuous stream of events.

How Event-Based Vision Works

Event-based vision systems use dynamic vision sensors (DVS), in which each pixel independently monitors its brightness and generates data only when that brightness changes. A pixel emits an event, typically encoded as its coordinates, a timestamp, and a polarity (brighter or darker), whenever its log intensity has changed by more than a set contrast threshold since its last event. Because static pixels stay silent, this approach reduces latency and power consumption while providing the high temporal resolution needed to follow fast motion in complex scenes. Your applications gain real-time responsiveness and more efficient data processing compared with frame-based vision, which captures the entire scene at fixed intervals.
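To make the contrast-threshold rule concrete, here is a minimal Python simulation of an idealized event pixel. It assumes intensity is sampled at discrete timestamps (a real sensor does this continuously in analog circuitry, so its timestamps are far finer), and the `Event` fields, the `generate_events` helper, and the 0.2 threshold are illustrative choices, not any sensor's or library's API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical event record used only for this sketch."""
    x: int         # pixel column
    y: int         # pixel row
    t: float       # timestamp in seconds
    polarity: int  # +1 = brighter (ON), -1 = darker (OFF)


def generate_events(samples, contrast_threshold=0.2):
    """Idealized DVS model driven by timestamped intensity samples.

    samples: list of (t, frame) pairs, where frame is a 2D list of
    strictly positive intensities. Each pixel keeps the log intensity
    at its last event and fires whenever the current log intensity
    differs from it by at least contrast_threshold.
    """
    _, first_frame = samples[0]
    # Per-pixel reference level: log intensity at the last event.
    reference = [[math.log(v) for v in row] for row in first_frame]
    events = []
    for t, frame in samples[1:]:
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value) - reference[y][x]
                # A large change can trigger several events in a row.
                while abs(delta) >= contrast_threshold:
                    polarity = 1 if delta > 0 else -1
                    events.append(Event(x, y, t, polarity))
                    reference[y][x] += polarity * contrast_threshold
                    delta = math.log(value) - reference[y][x]
    return events


# Usage: one pixel doubles in brightness, the others stay constant.
samples = [
    (0.000, [[1.0, 1.0], [1.0, 1.0]]),
    (0.001, [[1.0, 2.0], [1.0, 1.0]]),
]
events = generate_events(samples)
print(len(events))  # 3, since ln(2) ≈ 0.69 spans three thresholds of 0.2
```

Because the pixel tracks log intensity, doubling its brightness crosses ln 2 / 0.2 ≈ 3.5 thresholds and yields three ON events, while the pixels that stay constant produce nothing at all.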

Principles of Frame-Based Vision

Frame-based vision captures a scene as a series of discrete frames at fixed intervals, each frame storing an intensity value for every pixel. Because every snapshot is complete, pixels that did not change are transmitted and processed again, producing redundant data, and a change that happens just after a capture is not seen until the next frame, which slows response in dynamic environments. High-resolution sensors and consistent frame rates are therefore essential for accurate motion detection and image recognition in frame-based systems.
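The two costs of frame-based capture, fixed-interval sampling and repeated data, can be sketched in a few lines of Python. The helper names `frame_timestamps` and `redundant_fraction` are hypothetical, and the frames are tiny hand-made grayscale arrays rather than real camera output.

```python
def frame_timestamps(fps, num_frames):
    """Capture times (seconds) of a camera sampling at a fixed frame rate."""
    return [k / fps for k in range(num_frames)]


def redundant_fraction(prev_frame, curr_frame, tolerance=0):
    """Fraction of pixels whose value did not change between two frames."""
    total = 0
    unchanged = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for a, b in zip(prev_row, curr_row):
            total += 1
            if abs(a - b) <= tolerance:
                unchanged += 1
    return unchanged / total


# Usage: a 4x4 grayscale frame in which only one pixel changes.
prev = [[10] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[2][3] = 200
print(frame_timestamps(30, 3))         # capture times of the first three frames
print(redundant_fraction(prev, curr))  # 0.9375: 15 of 16 pixels are repeated
```

At 30 fps the captures are 1/30 s (about 33 ms) apart, so a change that happens just after one capture waits almost that long to be recorded, and in this example 15 of the 16 pixels in the second frame are sent again unchanged.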

Key Differences Between Event-Based and Frame-Based Vision

Event-based vision captures dynamic scenes by detecting changes in pixel intensity asynchronously, enabling high temporal resolution and low latency. Frame-based vision captures full images at fixed intervals, which increases data redundancy and slows reaction to fast motion. Event-based sensors excel at capturing rapid movement with lower power consumption, while frame-based cameras provide detailed spatial information well suited to static or slow-moving scenes.
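The data-volume difference comes down to simple arithmetic: a frame camera's bandwidth is fixed by resolution and frame rate, while an event sensor's scales with how many events the scene triggers. The sketch below uses assumed, illustrative figures (a 640×480 8-bit camera at 30 fps, events encoded in 8 bytes each, and two hypothetical event rates), not measurements from any particular sensor.

```python
def frame_bandwidth(width, height, bytes_per_pixel, fps):
    """Bytes per second for a camera that sends every pixel of every frame."""
    return width * height * bytes_per_pixel * fps


def event_bandwidth(events_per_second, bytes_per_event):
    """Bytes per second for a sensor that sends only events."""
    return events_per_second * bytes_per_event


# Illustrative assumptions, not measurements: a 640x480 8-bit camera at
# 30 fps, and events encoded in 8 bytes each.
frames = frame_bandwidth(640, 480, 1, 30)
quiet = event_bandwidth(10_000, 8)     # assumed rate for a mostly static scene
busy = event_bandwidth(1_000_000, 8)   # assumed rate for a scene full of motion

print(frames)  # 9216000
print(quiet)   # 80000
print(busy)    # 8000000
```

Under these assumptions a quiet scene needs under 1% of the frame camera's bandwidth, but a very busy scene can approach it, so the savings depend on how much of the scene is actually changing.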

Advantages of Event-Based Vision Systems

Event-based vision systems offer two major advantages, ultra-low latency and high dynamic range, which make them well suited to fast-moving scenes and challenging lighting. The wide dynamic range stems largely from each pixel responding to relative (logarithmic) changes in brightness rather than to absolute levels. Because these systems record changes rather than entire frames, they also reduce data redundancy and power consumption compared with traditional frame-based cameras. Event-based sensors therefore excel in real-time applications such as robotics, autonomous vehicles, and surveillance, where rapid response and energy efficiency are critical.
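The dynamic-range advantage follows from the pixel's logarithmic response. This minimal Python sketch uses the same idealized contrast-threshold model (the helper `events_for_step` and the 0.2 threshold are assumptions) to apply an identical 50% brightening at three very different light levels.

```python
import math


def events_for_step(before, after, contrast_threshold=0.2):
    """Hypothetical helper: events an idealized log-intensity pixel
    emits for a single brightness step from `before` to `after`."""
    delta = math.log(after) - math.log(before)
    return int(abs(delta) // contrast_threshold)


# The same +50% step at brightness levels five orders of magnitude apart.
for before in (0.01, 1.0, 1000.0):
    after = before * 1.5
    # Columns: starting level, absolute change, events emitted.
    print(before, after - before, events_for_step(before, after))
# The events column is 2 on every line.
```

The absolute change ranges from about 0.005 to 500, yet each step produces the same two events. Real sensors add noise and lose sensitivity in very dim light, so this shows the principle rather than any sensor's actual range.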

Limitations of Frame-Based Vision Technology

Frame-based vision has limited temporal resolution: anything that moves during a frame's exposure is smeared across the image as motion blur, and changes between frames are only seen at the next capture, delaying response in dynamic environments. Capturing the entire scene at fixed intervals also produces large amounts of redundant data, which makes processing inefficient and raises power consumption. These constraints hinder real-time applications such as robotics, autonomous driving, and high-speed object tracking.
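Motion blur can be estimated as the distance an object travels during one exposure, that is, speed multiplied by exposure time. The sketch below assumes a one-dimensional bright bar and approximates the exposure by averaging the bar over evenly spaced positions; `blur_extent_px`, `expose_moving_bar`, and the example speed and exposure are illustrative, not taken from a real camera.

```python
def blur_extent_px(speed_px_per_s, exposure_s):
    """Distance, in pixels, that an object travels during one exposure."""
    return speed_px_per_s * exposure_s


def expose_moving_bar(width, bar_start, bar_len, shift_px):
    """Hypothetical 1D frame of a bright bar that moves shift_px pixels
    during the exposure.

    The exposure is approximated by averaging the bar over shift_px + 1
    equally spaced integer positions; a static bar (shift_px = 0) stays sharp.
    """
    samples = shift_px + 1
    row = [0.0] * width
    for s in range(samples):
        for x in range(bar_start + s, bar_start + s + bar_len):
            if 0 <= x < width:
                row[x] += 1.0 / samples
    return row


# Assumed example: an object moving at 2000 px/s, captured with a 5 ms exposure.
shift = round(blur_extent_px(2000, 0.005))  # 10 pixels
sharp = expose_moving_bar(40, 5, 5, 0)
blurred = expose_moving_bar(40, 5, 5, shift)
print(sum(v > 0 for v in sharp), max(sharp))      # 5 1.0
print(sum(v > 0 for v in blurred), max(blurred))  # 15, then a peak of about 0.45 (5/11)
```

Instead of a sharp 5-pixel bar at full brightness, the moving bar is smeared over 15 pixels at under half its brightness. Shortening the exposure reduces the smear but collects less light, which is one of the trade-offs frame-based cameras face.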

Real-World Applications of Event-Based Vision

Event-based vision excels in real-time applications such as autonomous driving, robotics, and industrial automation, capturing high-speed motion with low latency and minimal data redundancy. Its ability to detect changes under challenging lighting also supports drone navigation, surveillance systems, and augmented reality devices. These advantages make event-based sensors especially valuable wherever visual processing must be faster and more efficient than traditional frame-based cameras allow.

Challenges in Adopting Event-Based Vision

Event-based vision faces several adoption challenges: few standardized datasets are available, and asynchronous event streams require specialized algorithms. Hardware compatibility issues and higher implementation costs also slow adoption relative to mature frame-based systems. Integrating event data into existing computer vision pipelines remains complex because those pipelines expect dense frames at a fixed rate rather than sparse, asynchronous events with much finer timing.
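One common way to bridge the representation gap is to bin events over a short time window into per-polarity count images (often called event frames or event histograms) that frame-based algorithms can consume. This Python sketch assumes a hypothetical `Event` record and a 10 ms window; it is one of several possible representations, not a standard API.

```python
from collections import namedtuple

# Hypothetical event record: pixel coordinates, timestamp (s), polarity (+1/-1).
Event = namedtuple("Event", ["x", "y", "t", "polarity"])


def events_to_histogram(events, width, height, t_start, t_end):
    """Accumulate events in [t_start, t_end) into two per-polarity count images.

    The result is a dense, frame-like array that standard frame-based
    algorithms (or a network expecting image input) can consume.
    """
    on = [[0] * width for _ in range(height)]
    off = [[0] * width for _ in range(height)]
    for e in events:
        if t_start <= e.t < t_end:
            target = on if e.polarity > 0 else off
            target[e.y][e.x] += 1
    return on, off


events = [
    Event(0, 0, 0.001, +1),
    Event(0, 0, 0.002, +1),
    Event(2, 1, 0.003, -1),
    Event(1, 1, 0.020, +1),  # falls outside the 10 ms window below
]
on, off = events_to_histogram(events, width=3, height=2, t_start=0.0, t_end=0.010)
print(on)   # [[2, 0, 0], [0, 0, 0]]
print(off)  # [[0, 0, 0], [0, 0, 1]]
```

The trade-off is that binning discards the precise timing inside each window, which is part of what makes event data valuable; richer representations, such as time surfaces, try to keep more of it.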

Future Outlook: Event-Based vs Frame-Based Vision

Event-based vision offers substantial advantages for future applications requiring low latency and high dynamic range, particularly in autonomous vehicles, robotics, and augmented reality. Frame-based vision continues to evolve with advances in sensor resolution and computational power, maintaining its dominance in areas like image recognition and video processing. Hybrid systems that combine event-based and frame-based sensing are a promising direction, pairing the temporal precision of events with the spatial detail of frames to build more robust and efficient computer vision solutions.
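As a minimal illustration of frame-plus-event fusion, the sketch below estimates a pixel's intensity between frames by starting from its last frame value and adding one contrast threshold per event in log space, an idea sometimes described as direct integration. It assumes the idealized event model and a known, fixed threshold; `estimate_intensity` is a hypothetical helper.

```python
import math


def estimate_intensity(frame_intensity, polarities, contrast_threshold=0.2):
    """Hypothetical helper: estimate a pixel's current intensity from its
    last frame value plus the events it fired since that frame.

    Each event means the log intensity moved by about one contrast
    threshold, so summing polarities updates the frame's log value.
    """
    log_i = math.log(frame_intensity)
    for p in polarities:
        log_i += p * contrast_threshold
    return math.exp(log_i)


# A pixel read as 100.0 in the last frame, then fired three ON events and one OFF.
print(estimate_intensity(100.0, [+1, +1, +1, -1]))  # about 149.18 (100 * e**0.4)
```

Noise and threshold mismatch make these estimates drift over time, so practical hybrid methods typically blend or reset the estimate each time a new frame arrives.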

Event-based vision vs Frame-based vision Infographic

libmatt.com
