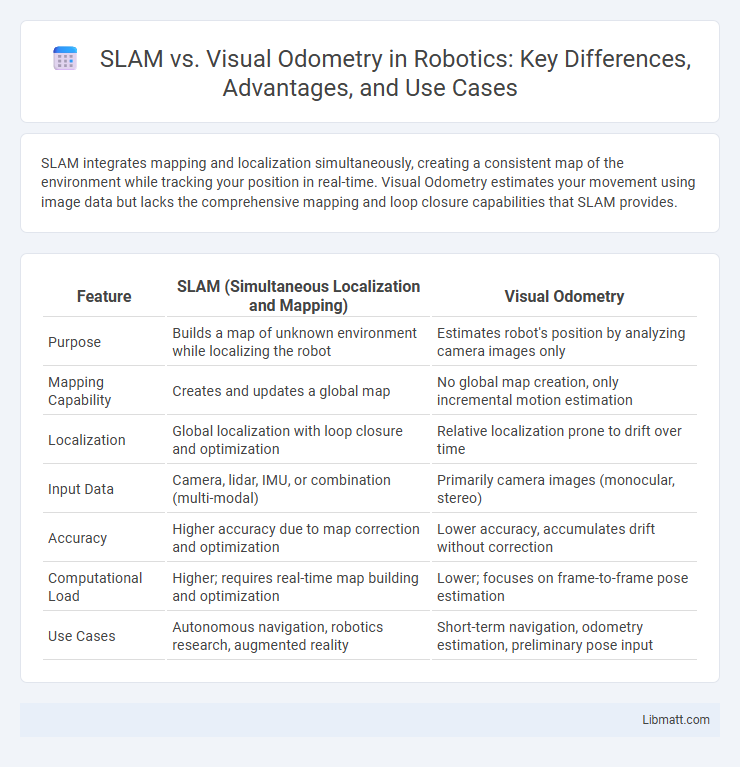

SLAM integrates mapping and localization simultaneously, creating a consistent map of the environment while tracking your position in real time. Visual Odometry estimates your movement using image data but lacks the comprehensive mapping and loop closure capabilities that SLAM provides.

Table of Comparison

| Feature | SLAM (Simultaneous Localization and Mapping) | Visual Odometry |
|---|---|---|
| Purpose | Builds a map of an unknown environment while localizing the robot within it | Estimates the robot's motion by analyzing consecutive camera images |
| Mapping Capability | Creates and updates a global map | No global map; only incremental motion estimation |
| Localization | Global localization, corrected by loop closure and optimization | Relative localization that drifts over time |
| Input Data | Camera, LiDAR, IMU, or a combination (multi-modal) | Primarily camera images (monocular, stereo, or RGB-D) |
| Accuracy | Higher, thanks to map-based correction and optimization | Lower; accumulates drift without correction |
| Computational Load | Higher; requires real-time map building and optimization | Lower; focuses on frame-to-frame pose estimation |
| Use Cases | Autonomous navigation, robotics research, augmented reality | Short-term navigation, odometry estimation, initial pose estimates for other systems |

Introduction to SLAM and Visual Odometry

SLAM (Simultaneous Localization and Mapping) and Visual Odometry (VO) are essential techniques in robotics and computer vision for navigation and environment mapping. SLAM constructs a map of unknown surroundings while simultaneously estimating the robot's position, using data from sensors such as cameras or LiDAR. Visual Odometry estimates a device's incremental motion by analyzing sequential camera images; this shows how the device moves, but it lacks the comprehensive mapping capabilities of full SLAM systems.

Core Principles of SLAM

Simultaneous Localization and Mapping (SLAM) integrates sensor data to build a map of an unknown environment while tracking the device's position within it, using techniques such as probabilistic state estimation (for example Kalman filters, particle filters, or graph-based optimization) and loop closure detection. Visual Odometry (VO), by contrast, estimates motion by analyzing sequential camera images without constructing a global map. SLAM's core principles rest on fusing sensor inputs, updating the map in real time, and correcting accumulated error, which together enable autonomous navigation in dynamic and unstructured settings.
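To make "probabilistic state estimation" concrete, here is a minimal sketch of filter-based SLAM in one dimension. It assumes a robot moving along a line, a single static landmark, a known control input per step, and a sensor that measures the signed distance from robot to landmark. Because this toy model is linear, the extended Kalman filter used in EKF-SLAM reduces to an ordinary Kalman filter. The joint state holds both the robot position (localization) and the landmark position (mapping). The function name `kf_slam_1d` and the noise values are illustrative assumptions, not part of any particular SLAM library.

```python
from typing import List, Tuple


def kf_slam_1d(
    controls: List[float],
    measurements: List[float],
    motion_var: float = 0.01,
    meas_var: float = 0.04,
) -> Tuple[float, float, List[List[float]]]:
    """Jointly estimate robot position x and landmark position m on a line.

    Hypothetical toy model (not a production SLAM system):
      motion:      x_k = x_{k-1} + u_k + noise   (variance motion_var)
      measurement: z_k = m - x_k + noise         (variance meas_var)
    """
    # State [x, m]: the robot starts at the origin with certainty;
    # the landmark is unknown, so it gets a very large prior variance.
    x, m = 0.0, 0.0
    P = [[0.0, 0.0], [0.0, 1e6]]

    for u, z in zip(controls, measurements):
        # Predict: only the robot moves, so only its variance grows.
        x += u
        P[0][0] += motion_var

        # Update with measurement Jacobian H = [-1, 1] (since z = m - x).
        innovation = z - (m - x)
        S = P[0][0] - P[0][1] - P[1][0] + P[1][1] + meas_var
        PHt = [-P[0][0] + P[0][1], -P[1][0] + P[1][1]]  # P @ H^T
        K = [PHt[0] / S, PHt[1] / S]
        x += K[0] * innovation
        m += K[1] * innovation

        # Covariance update P = (I - K H) P; for symmetric P, H P equals (P H^T)^T.
        P = [[P[i][j] - K[i] * PHt[j] for j in range(2)] for i in range(2)]

    return x, m, P


# Example: landmark truly at 10, robot moves +1 per step, noiseless readings.
x_est, m_est, P = kf_slam_1d([1.0] * 5, [10.0 - k for k in range(1, 6)])
```

Both estimates end up near the true values (robot at 5, landmark at 10), and the landmark variance collapses from its huge prior because every measurement couples the two unknowns.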

Fundamental Concepts of Visual Odometry

Visual Odometry estimates your camera's motion by analyzing sequential image frames to track feature points and calculate the relative displacement between frames. Unlike SLAM, which simultaneously builds a map of the environment and localizes the device, Visual Odometry focuses solely on motion estimation without creating a global map. Skipping map building keeps pose estimation fast and efficient, which suits applications where real-time performance and simplicity are crucial.
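One common way to turn tracked feature points into a relative displacement is to fit the rigid motion that best aligns the matched points from two frames. The sketch below assumes the features are already detected and matched, and that their positions are metric 2D coordinates (for example ground-plane points recovered with a stereo rig); real VO pipelines typically work in 3D or estimate an essential matrix from pixel coordinates. The helper `estimate_rigid_2d` is hypothetical and implements the closed-form least-squares (Procrustes/Kabsch) solution in 2D.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_rigid_2d(prev_pts: List[Point], curr_pts: List[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping prev_pts onto curr_pts.

    Returns (theta, tx, ty) such that curr ≈ R(theta) @ prev + t.
    """
    n = len(prev_pts)
    if n < 2 or n != len(curr_pts):
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n
    # Sum dot and cross products of the centered pairs;
    # in 2D the optimal angle is atan2(sum of cross, sum of dot).
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated previous centroid onto the current one.
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty
```

If the points are expressed in the camera's own frame and the scene is static, the camera's motion between the two frames is the inverse of the returned transform.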

Key Differences Between SLAM and Visual Odometry

SLAM (Simultaneous Localization and Mapping) creates a map of an unknown environment while simultaneously tracking the device's position, whereas Visual Odometry only estimates the device's motion from consecutive camera images, without generating a global map. SLAM uses that map, together with loop closure and global optimization, to correct drift and improve localization accuracy, which makes it suitable for complex navigation tasks; Visual Odometry relies on frame-to-frame analysis, so its errors accumulate over time. The key difference is SLAM's ability to build and update a spatial representation of its surroundings, which enables loop closure and long-term consistency, while Visual Odometry focuses on short-term tracking.
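The effect of loop closure can be illustrated with a deliberately tiny pose graph. In this sketch (hypothetical helper names, 1D positions, a single loop-closure edge), slightly biased odometry increments are chained frame to frame, so the dead-reckoned end point drifts away from the start even though the robot physically returned there. A loop-closure edge stating "the last pose equals the first" is then added and the weighted least-squares problem is solved. For this special case only (one chain, one loop edge, first pose fixed), setting the gradient to zero yields a closed form that spreads the drift evenly over all odometry edges; general pose graphs need an iterative solver.

```python
from itertools import accumulate
from typing import List


def dead_reckon(increments: List[float]) -> List[float]:
    """Chain frame-to-frame increments from x0 = 0 (pure odometry, no correction)."""
    return [0.0] + list(accumulate(increments))


def close_loop(increments: List[float], loop_offset: float = 0.0,
               loop_weight: float = 100.0) -> List[float]:
    """Solve a 1D pose graph: odometry edges (weight 1) plus one loop edge x_n - x_0 = c.

    With x_0 fixed, minimizing sum_i (delta_i - d_i)^2 + w * (sum_i delta_i - c)^2
    gives delta_i = d_i - w * (D - c) / (1 + n * w), where D = sum_i d_i.
    """
    n = len(increments)
    drift = sum(increments) - loop_offset
    correction = loop_weight * drift / (1.0 + n * loop_weight)
    return dead_reckon([d - correction for d in increments])


# Robot drives 4 m out and 4 m back; odometry is biased by +0.05 m per step.
odom = [1.05] * 4 + [-0.95] * 4
print(dead_reckon(odom)[-1])  # roughly 0.4: drift from frame-to-frame chaining
print(close_loop(odom)[-1])   # roughly 0.0005: loop closure pulls the end back to the start
```

The loop weight plays the role of confidence in the loop-closure detection: a higher weight trusts the "back at the start" observation more than the odometry.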

Types of Sensors Used in SLAM and Visual Odometry

SLAM systems typically use a combination of sensors such as LiDAR, RGB-D cameras, and IMUs to capture detailed environmental and motion data for accurate mapping and localization. Visual Odometry primarily relies on monocular, stereo, or RGB-D cameras to estimate the trajectory of a moving camera by analyzing sequential image frames. Sensor fusion in SLAM enhances robustness and precision by integrating diverse data sources, whereas Visual Odometry focuses mainly on visual input for motion estimation.
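At its simplest, sensor fusion combines independent estimates of the same quantity, weighting each by how much it can be trusted. The sketch below assumes two unbiased, independent Gaussian estimates (say, a displacement from the camera and one integrated from an IMU) and fuses them by inverse-variance weighting, which is the scalar core of the Kalman-filter update many fusion systems use. The function name and the numbers are hypothetical.

```python
from typing import Tuple


def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> Tuple[float, float]:
    """Fuse two independent Gaussian estimates of the same quantity.

    Each estimate is weighted by its inverse variance; the fused variance is
    never larger than the smaller of the two input variances.
    """
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_est = fused_var * (w_a * est_a + w_b * est_b)
    return fused_est, fused_var


# Camera says 1.00 m (variance 0.04); IMU says 1.10 m (variance 0.01).
est, var = fuse(1.00, 0.04, 1.10, 0.01)
```

Here the result is 1.08 m with variance 0.008: it leans toward the more certain sensor and is more certain than either input alone.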

Accuracy and Robustness Comparison

SLAM (Simultaneous Localization and Mapping) generally provides higher accuracy and robustness than Visual Odometry (VO) because it builds and updates a consistent map and uses it to correct drift over time. Visual Odometry estimates motion incrementally without global map correction, so errors accumulate, and its robustness suffers in challenging environments such as dynamic or poorly textured scenes. For applications that require precise navigation and long-term stability, SLAM offers superior performance through loop closure and global optimization.
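A back-of-the-envelope model shows why uncorrected VO drift grows with distance travelled. Assume, as a simplification, 1D motion where the true position after $n$ frames is $x_n = \sum_k d_k$ and each frame-to-frame estimate carries an independent, zero-mean error $\varepsilon_k$ with variance $\sigma^2$. Rotation errors, which in practice make drift grow faster, are ignored here.

```latex
\hat{x}_n = \sum_{k=1}^{n} \left(d_k + \varepsilon_k\right), \qquad
\hat{x}_n - x_n = \sum_{k=1}^{n} \varepsilon_k, \qquad
\operatorname{Var}\!\left(\hat{x}_n - x_n\right) = n\,\sigma^2
\;\Rightarrow\; \text{std}\!\left(\hat{x}_n - x_n\right) = \sqrt{n}\,\sigma .
```

Nothing in pure odometry makes this error shrink again; SLAM reduces it when a loop closure or a re-observed landmark ties the current pose back to earlier parts of the map.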

Use Cases for SLAM

SLAM (Simultaneous Localization and Mapping) is essential for use cases that require real-time mapping and localization in unknown environments, such as autonomous robots, drone navigation, and augmented reality applications. It integrates sensor data to build comprehensive 3D maps while simultaneously tracking the device's position, enabling robust navigation in dynamic or GPS-denied areas. Because SLAM continuously updates its spatial model of the surroundings, it also supports obstacle avoidance in complex, unstructured settings where Visual Odometry alone may fall short.
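One common map representation behind obstacle avoidance is the occupancy grid, where each cell stores the log-odds that it is occupied. The sketch below reduces standard log-odds grid mapping to a single row of cells and a single range beam starting at cell 0: cells the beam passes through become more likely free, and the cell where it stops becomes more likely occupied. The same additive update extends to 2D and 3D grids. The constants `L_OCC` and `L_FREE` and the function names are illustrative assumptions.

```python
import math
from typing import List

L_OCC = 0.85   # assumed log-odds increment for the cell where the beam ends
L_FREE = -0.4  # assumed log-odds decrement for cells the beam passes through


def update_beam(grid: List[float], hit_index: int) -> None:
    """Update a 1D row of log-odds cells in place with one beam starting at cell 0.

    Cells before the hit are observed as free; the hit cell is observed as
    occupied; cells beyond the hit are unobserved and left unchanged.
    """
    for i in range(min(hit_index, len(grid))):
        grid[i] += L_FREE
    if 0 <= hit_index < len(grid):
        grid[hit_index] += L_OCC


def probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability (logistic function)."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


grid = [0.0] * 6          # log-odds 0 means probability 0.5 (unknown)
update_beam(grid, 3)      # two scans both report an obstacle in cell 3
update_beam(grid, 3)
```

Working in log-odds turns repeated Bayesian updates into additions, which is why this form is popular for maps that must be refreshed in real time.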

Applications of Visual Odometry

Visual Odometry is widely used in autonomous vehicles, robotics, and augmented reality to estimate a camera's motion by analyzing image sequences, enabling navigation in GPS-denied environments. Its applications extend to drone flight control, where real-time position tracking keeps movement stable and accurate, and to mobile devices, where it supports augmented reality and location-based services. Applied well, visual odometry can significantly improve the accuracy and reliability of motion estimation in dynamic and complex environments.
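Real-time position tracking in these applications comes down to chaining the per-frame motion estimates into a trajectory. The planar (SE(2)) sketch below assumes each VO step delivers a relative motion `(dx, dy, dtheta)` expressed in the robot's current frame; the names `compose` and `integrate` are hypothetical. Any error in one step is carried into every later pose, which is the drift discussed above.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading in radians)


def compose(pose: Pose, delta: Pose) -> Pose:
    """Apply a relative motion expressed in the robot's current frame."""
    x, y, th = pose
    dx, dy, dth = delta
    return (
        x + math.cos(th) * dx - math.sin(th) * dy,
        y + math.sin(th) * dx + math.cos(th) * dy,
        math.atan2(math.sin(th + dth), math.cos(th + dth)),  # wrap heading to [-pi, pi]
    )


def integrate(deltas: List[Pose], start: Pose = (0.0, 0.0, 0.0)) -> List[Pose]:
    """Chain per-frame VO estimates into a trajectory, starting from `start`."""
    traj = [start]
    for d in deltas:
        traj.append(compose(traj[-1], d))
    return traj


# Drive 1 m forward and turn left 90 degrees, four times: a unit square.
trajectory = integrate([(1.0, 0.0, math.pi / 2)] * 4)
```

With perfect increments the square closes exactly at the origin; feeding in slightly wrong turn angles instead shows how quickly a small heading error bends the whole trajectory.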

Challenges in Implementation

Implementing SLAM faces challenges such as handling large-scale environments, managing computational complexity, and maintaining map consistency during loop closures. Visual Odometry struggles with feature tracking in low-texture or dynamic scenes, leading to drift accumulation over time without global map correction. Both techniques require robust sensor calibration and real-time processing capabilities to achieve accurate and reliable localization.
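Feature tracking in dynamic scenes is commonly hardened with RANSAC: the motion model is fitted to random minimal samples of the matches, and the hypothesis that most matches agree with wins, so features on moving objects are rejected as outliers. The sketch below uses the simplest possible model, a pure 2D image translation (one match per hypothesis), as a stand-in for the essential-matrix or PnP models that VO systems typically fit. The function name, threshold, and iteration count are assumptions.

```python
import random
from typing import List, Tuple

Vec = Tuple[float, float]


def ransac_translation(
    prev_pts: List[Vec],
    curr_pts: List[Vec],
    iterations: int = 100,
    threshold: float = 0.5,
    seed: int = 0,
) -> Tuple[Vec, List[int]]:
    """Estimate a 2D translation from feature matches that may contain outliers.

    Each hypothesis comes from one random match (the minimal sample for a
    translation-only model); the hypothesis with the most inliers is refined
    by averaging the displacements of its inliers.
    """
    if not prev_pts or len(prev_pts) != len(curr_pts):
        raise ValueError("need a non-empty, equal-length list of matches")
    rng = random.Random(seed)
    flows = [(q[0] - p[0], q[1] - p[1]) for p, q in zip(prev_pts, curr_pts)]
    best_inliers: List[int] = []
    for _ in range(iterations):
        hx, hy = flows[rng.randrange(len(flows))]
        inliers = [i for i, (fx, fy) in enumerate(flows)
                   if (fx - hx) ** 2 + (fy - hy) ** 2 <= threshold ** 2]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    tx = sum(flows[i][0] for i in best_inliers) / len(best_inliers)
    ty = sum(flows[i][1] for i in best_inliers) / len(best_inliers)
    return (tx, ty), best_inliers
```

Matches on a moving object produce displacements that disagree with the dominant camera motion, so they rarely gather enough support to win and end up outside the returned inlier set.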

Future Trends in SLAM and Visual Odometry

Future trends in SLAM and Visual Odometry emphasize the integration of deep learning techniques to enhance robustness in dynamic and complex environments. Real-time 3D mapping combined with multi-sensor fusion, including LiDAR and IMU data, is advancing to improve accuracy and scalability in autonomous systems. Emerging research focuses on lightweight algorithms optimized for edge computing, enabling efficient deployment on mobile robots and augmented reality devices.

SLAM vs Visual Odometry Infographic

libmatt.com