Fan-out is the number of components or modules that a single output drives or influences; it affects system complexity and, in circuits, how much load that output must carry. Fan-in is the number of inputs a single component or module receives; it affects how that component integrates with the rest of your design and how efficiently it processes its inputs.

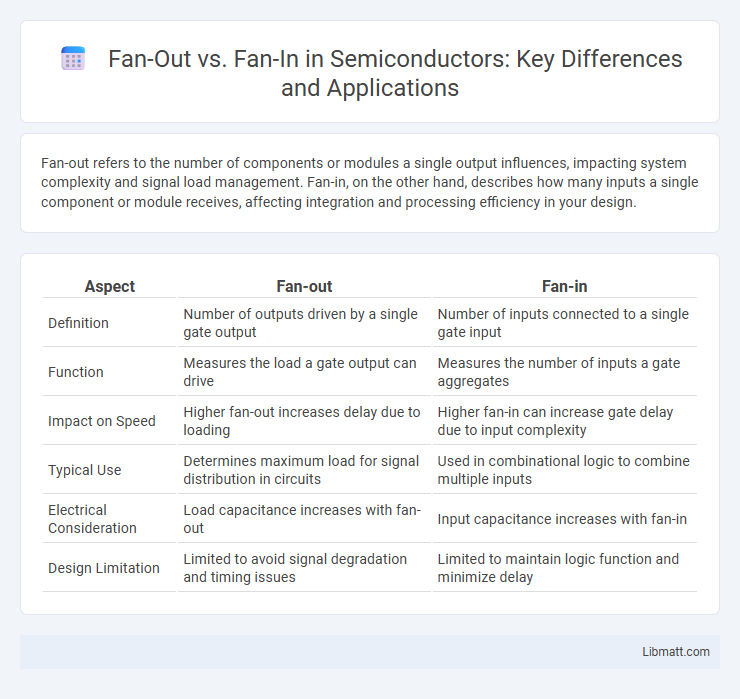

Table of Comparison

| Aspect | Fan-out | Fan-in |
|---|---|---|
| Definition | Number of gate inputs driven by a single gate output | Number of inputs a single gate accepts |
| Function | Measures the load a gate output must drive | Measures the number of inputs a gate combines |
| Impact on Speed | Higher fan-out increases delay because the output drives a larger load | Higher fan-in increases gate delay (in CMOS, through longer series transistor stacks) |
| Typical Use | Determines the maximum load when distributing a signal in a circuit | Used in combinational logic to combine multiple inputs |
| Electrical Consideration | Load capacitance increases with fan-out | Input capacitance increases with fan-in |
| Design Limitation | Limited to avoid signal degradation and timing issues | Limited to keep gate delay low; wider functions are split across several gate levels |
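The speed rows of the table can be made concrete with the logical-effort delay model from CMOS design. In that model a gate's delay is d = g·h + p, measured in units of a technology time constant τ. Here g is the logical effort, h the electrical effort, and p the parasitic delay. The sketch below assumes every load is an identical copy of the driving gate, so h equals the fan-out. It uses the textbook values for an n-input NAND, g = (n + 2)/3 and p = n, and with n = 1 these reduce to an inverter. Treat it as a first-order estimate, not a timing analysis.

```python
from fractions import Fraction

def nand_delay(fan_in: int, fan_out: int) -> Fraction:
    """Logical-effort delay (in units of tau) of an n-input NAND gate.

    Assumes each of the `fan_out` loads is an identical copy of this gate,
    so the electrical effort h equals fan_out. fan_in == 1 is an inverter.
    """
    if fan_in < 1 or fan_out < 1:
        raise ValueError("fan_in and fan_out must be >= 1")
    g = Fraction(fan_in + 2, 3)   # logical effort grows with fan-in
    p = Fraction(fan_in)          # parasitic delay grows with fan-in
    h = Fraction(fan_out)         # electrical effort grows with fan-out
    return g * h + p

for n in (1, 2, 4):
    print(f"{n}-input gate driving 4 loads: {float(nand_delay(n, 4)):.2f} tau")
```

Raising the fan-out increases h. Raising the fan-in increases both g and p. Either change lengthens the delay, which is what the "Impact on Speed" row describes.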

Introduction to Fan-out and Fan-in

Fan-out measures the number of downstream components or modules a single component influences, impacting system complexity and maintainability. Fan-in refers to how many components or modules call or depend on a single module, indicating code reusability and potential bottlenecks. Understanding your system's fan-out and fan-in helps optimize design, improve scalability, and reduce error propagation.
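In the software sense used here, both numbers can be read straight off a call graph. A module's fan-out is the number of distinct modules it calls, and its fan-in is the number of distinct modules that call it. The sketch below uses a small, hypothetical call graph whose module names are invented for illustration.

```python
from collections import defaultdict

# Hypothetical call graph: each module maps to the modules it calls.
CALLS = {
    "api": ["auth", "orders", "logging"],
    "auth": ["db", "logging"],
    "orders": ["db", "logging"],
    "db": [],
    "logging": [],
}

def fan_metrics(calls: dict[str, list[str]]) -> dict[str, tuple[int, int]]:
    """Return {module: (fan_in, fan_out)}, counting distinct callers/callees."""
    fan_in = defaultdict(int)
    for callees in calls.values():
        for callee in set(callees):       # count each caller once per callee
            fan_in[callee] += 1
    modules = set(calls) | set(fan_in)    # include modules that are only called
    return {m: (fan_in[m], len(set(calls.get(m, [])))) for m in sorted(modules)}

for module, (fin, fout) in fan_metrics(CALLS).items():
    print(f"{module:8} fan-in={fin} fan-out={fout}")
```

In this graph `logging` has the highest fan-in, as a shared utility would. `api` has the highest fan-out, as an orchestrating module would. This matches the reusability and complexity readings described above.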

Defining Fan-out: Meaning and Context

Fan-out refers to the number of downstream components or modules that a single module or component directly communicates with or influences in a software system or digital circuit. It measures the branching factor, indicating how many other units depend on or are triggered by the output of a specific component. High fan-out can imply increased complexity and potential maintainability challenges due to tighter coupling between modules.

Understanding Fan-in: Core Concepts

Fan-in refers to the number of input signals or data paths converging on a particular node, component, or system. A high fan-in means the component must accept and combine many inputs, which can increase its complexity and turn it into a data bottleneck. Knowing your system's fan-in helps you balance input concurrency against the capacity of the component that receives those inputs.
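Sometimes a single node cannot accept as many inputs as a function needs. A common workaround in logic design is to split the wide operation into a tree of smaller gates, which trades fan-in for extra levels of logic. The sketch below counts the levels and gates such a tree needs. It assumes an associative operation such as AND and a hypothetical per-gate limit `max_fan_in`.

```python
def reduction_tree(n_inputs: int, max_fan_in: int) -> tuple[int, int]:
    """Return (levels, gates) needed to combine n_inputs signals with an
    associative operation (e.g. AND) using gates of at most max_fan_in inputs."""
    if n_inputs < 1 or max_fan_in < 2:
        raise ValueError("need n_inputs >= 1 and max_fan_in >= 2")
    levels = gates = 0
    signals = n_inputs
    while signals > 1:
        full, rem = divmod(signals, max_fan_in)
        # A leftover group of 2+ signals needs its own gate; a single
        # leftover signal passes straight through to the next level.
        gates += full + (1 if rem >= 2 else 0)
        signals = full + (1 if rem else 0)
        levels += 1
    return levels, gates

print(reduction_tree(8, 2))  # (3, 7)
print(reduction_tree(8, 4))  # (2, 3)
```

Lowering the fan-in limit from 4 to 2 adds a level of logic. This is the delay-versus-fan-in trade-off from the comparison table.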

Key Differences Between Fan-out and Fan-in

Fan-out is the number of downstream modules or components a single module calls or interacts with, which indicates its influence on subsequent processing. Fan-in is the number of modules that call or depend on a single module. A high fan-out suggests greater complexity and maintenance effort, because the module depends on many others and can break when any of them changes. A high fan-in usually indicates a well-reused module, but it also means that a change to that module ripples out to every caller. Understanding these differences is crucial for designing scalable software architectures and managing coupling between components.
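One published way to combine the two measures into a single coupling score is the Henry-Kafura information-flow metric: length × (fan-in × fan-out)². The sketch below applies it to a few hypothetical modules with invented names and numbers. The original metric counts information flows rather than only calls, so this version simplifies it to call counts. It is one metric among several, not the only way to weigh fan-in against fan-out.

```python
def henry_kafura(length: int, fan_in: int, fan_out: int) -> int:
    """Information-flow complexity: length * (fan_in * fan_out) ** 2."""
    return length * (fan_in * fan_out) ** 2

# Hypothetical modules: name -> (lines of code, fan-in, fan-out)
MODULES = {
    "parser": (120, 2, 3),
    "utils": (80, 9, 1),
    "controller": (200, 1, 7),
}

# Highest score first: these modules are the strongest refactoring candidates.
ranked = sorted(MODULES, key=lambda m: henry_kafura(*MODULES[m]), reverse=True)
for name in ranked:
    print(name, henry_kafura(*MODULES[name]))
```

Because the terms are multiplied, a module with zero fan-in or zero fan-out scores 0 regardless of its size. This is a known limitation of the metric.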

Fan-out in Hardware and Software Systems

Fan-out in hardware systems refers to the maximum number of input loads that a single output can drive without signal degradation, crucial for maintaining signal integrity in digital circuits. In software architecture, fan-out measures the number of modules or components a particular module depends on, impacting system complexity and maintainability. Optimizing fan-out involves balancing connectivity to prevent performance bottlenecks and reduce coupling between components in both hardware and software contexts.
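For bipolar (TTL) logic families, the hardware definition above reduces to a DC current budget. An output can drive as many inputs as its sink current covers in the low state, or as many as its source current covers in the high state, whichever number is smaller. The sketch below uses commonly quoted limits for the standard 7400 and 74LS00 families, which should be checked against the datasheet of the actual part. It ignores capacitive loading, which is what usually limits fan-out in CMOS.

```python
def dc_fan_out(i_ol_ua: int, i_il_ua: int, i_oh_ua: int, i_ih_ua: int) -> int:
    """Maximum number of identical inputs one output can drive (DC limits only).

    All currents are magnitudes in microamps:
      i_ol_ua / i_oh_ua: current the output can sink (low) / source (high)
      i_il_ua / i_ih_ua: current each driven input presents when low / high
    """
    low_state = i_ol_ua // i_il_ua     # whole loads only, so round down
    high_state = i_oh_ua // i_ih_ua
    return min(low_state, high_state)  # the weaker state sets the limit

# Commonly quoted limits; verify against the datasheet of the actual part.
print("7400 :", dc_fan_out(16_000, 1_600, 400, 40))  # 10
print("74LS:", dc_fan_out(8_000, 400, 400, 20))      # 20
```

Mixing families changes the answer. A 74LS output driving standard 7400 inputs is limited by its low-state sink current to 8000 // 1600 = 5 loads.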

Applications of Fan-in in Modern Architectures

Fan-in is central to modern architectures that consolidate multiple inputs into a single processing point, because it enables efficient aggregation of data from diverse sources. In microservices, for example, responses from several services often must converge on one aggregator before a coherent result can be returned. Handling that convergence carefully, with timeouts, partial results, and back-pressure, keeps a failure or slowdown in one source from stalling the whole response. Managing fan-in effectively keeps communication paths simple and performance predictable in distributed systems.
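A minimal fan-in in Python can be built from several producers that write into one shared queue, which a single consumer drains. The sketch below uses `asyncio`. The producer names are hypothetical stand-ins for real upstream services or sensors. The queue bound of 8 is an arbitrary choice: it makes fast producers wait when the consumer falls behind.

```python
import asyncio

async def producer(name: str, queue: asyncio.Queue, count: int) -> None:
    """Hypothetical upstream source that emits `count` tagged items."""
    for i in range(count):
        await queue.put((name, i))  # waits while the queue is full (back-pressure)
        await asyncio.sleep(0)      # yield so producers interleave

async def fan_in(sources: list[str], per_source: int) -> list[tuple[str, int]]:
    """Merge items from several producers into one stream, in arrival order."""
    queue: asyncio.Queue = asyncio.Queue(maxsize=8)
    tasks = [asyncio.create_task(producer(s, queue, per_source)) for s in sources]
    merged = []
    for _ in range(len(sources) * per_source):
        merged.append(await queue.get())  # single consumer: the fan-in point
    await asyncio.gather(*tasks)          # surface any producer errors
    return merged

if __name__ == "__main__":
    items = asyncio.run(fan_in(["sensor-a", "sensor-b", "sensor-c"], per_source=3))
    print(len(items), items[:3])
```

Items from different sources interleave in the merged stream, but each source's own items stay in the order that source produced them.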

Performance Implications of Fan-out and Fan-in

Fan-out impacts system performance by increasing the workload on a single source as it distributes requests to multiple destinations, potentially leading to higher latency and resource contention. Fan-in consolidates multiple inputs into a single process, which can create bottlenecks if the aggregation point becomes overwhelmed, affecting throughput and response time. Optimizing your architecture requires balancing fan-out and fan-in to minimize delays and maximize efficient resource utilization in distributed systems.
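The latency cost of fan-out can be estimated with a back-of-the-envelope model. If a request fans out to n backends and must wait for all of them, it is slow whenever any single backend is slow. Suppose each call independently has a 1% chance of exceeding its 99th-percentile latency. Then the probability that the whole request does is 1 - 0.99^n.

```python
def prob_request_slow(fan_out: int, p_slow: float = 0.01) -> float:
    """Probability that at least one of `fan_out` independent calls is slow,
    where each call is slow with probability p_slow."""
    return 1 - (1 - p_slow) ** fan_out

for n in (1, 10, 100):
    print(f"fan-out {n:>3}: {prob_request_slow(n):.1%} of requests hit a slow call")
```

Under these assumptions, a fan-out of 10 makes about 9.6% of requests slow. A fan-out of 100 makes about 63.4% slow. This is why wide fan-outs are sensitive to tail latency, not just average latency.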

Scalability Considerations: Fan-out vs Fan-in

Fan-out architecture enhances scalability by distributing workloads across multiple instances, reducing bottlenecks and keeping the system responsive under high demand. Fan-in mechanisms aggregate data or requests from many sources into a single point, which can become a performance constraint if it is not managed with load balancing or parallel processing. Effective scalability requires balancing fan-out's ability to scale horizontally against the fan-in point's capacity to absorb converging data streams without becoming a bottleneck.
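One common way to let fan-out scale without overwhelming the component that fans back in is to cap the number of requests in flight. The sketch below uses `asyncio` with a semaphore. `call_backend` is a hypothetical stand-in for a network call, and the limit of 5 is arbitrary.

```python
import asyncio

async def call_backend(item: int, sem: asyncio.Semaphore, stats: dict) -> int:
    """Hypothetical backend call; the semaphore caps concurrent calls."""
    async with sem:
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0.01)  # stand-in for network latency
        stats["in_flight"] -= 1
        return item * 2

async def bounded_fan_out(items: list[int], limit: int) -> tuple[list[int], int]:
    """Fan out one call per item, with at most `limit` calls in flight."""
    sem = asyncio.Semaphore(limit)
    stats = {"in_flight": 0, "peak": 0}
    results = await asyncio.gather(*(call_backend(i, sem, stats) for i in items))
    return results, stats["peak"]

if __name__ == "__main__":
    results, peak = asyncio.run(bounded_fan_out(list(range(20)), limit=5))
    print(f"{len(results)} results, peak concurrency {peak}")
```

All 20 calls are still issued, but the downstream side never sees more than 5 at once. `asyncio.gather` returns the results in the same order as the inputs.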

Common Challenges and Best Practices

Fan-out and fan-in patterns often face challenges such as managing task dependencies, handling failures gracefully, and ensuring efficient load distribution across multiple parallel processes. Best practices include implementing robust error handling mechanisms, using asynchronous messaging queues to decouple processes, and monitoring resource utilization to prevent bottlenecks during high fan-out or fan-in volumes. Employing idempotent operations and retry strategies enhances reliability, while centralized logging and tracing tools improve visibility across complex asynchronous workflows.
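The retry and error-handling practices above can be sketched with `asyncio`. Each fanned-out call is retried with exponential backoff and jitter. `asyncio.gather(..., return_exceptions=True)` then lets the fan-in step collect partial results instead of failing the whole batch. The flaky operations are hypothetical, and retrying them is only safe because they are assumed to be idempotent.

```python
import asyncio
import random

async def with_retries(op, attempts: int = 3, base_delay: float = 0.01):
    """Retry `op` on ConnectionError with exponential backoff and jitter.
    Only safe when `op` is idempotent."""
    for attempt in range(attempts):
        try:
            return await op()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the failure
            delay = base_delay * 2 ** attempt * random.uniform(0.5, 1.5)
            await asyncio.sleep(delay)

def make_flaky(name: str, failures: int):
    """Hypothetical operation that fails `failures` times, then succeeds."""
    calls = 0
    async def op() -> str:
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise ConnectionError(f"{name}: transient failure {calls}")
        return name
    return op

async def run_batch() -> list:
    ops = [make_flaky("a", 0), make_flaky("b", 2), make_flaky("c", 5)]
    # return_exceptions=True returns failures as values instead of raising the first one
    return await asyncio.gather(*(with_retries(op) for op in ops), return_exceptions=True)

if __name__ == "__main__":
    for result in asyncio.run(run_batch()):
        print(repr(result))
```

Here `b` recovers on its third attempt. `c` exhausts its retries, so its `ConnectionError` appears in the result list next to the successful values, where it can be logged or traced.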

Choosing the Right Approach: Fan-out or Fan-in

Selecting the optimal approach between fan-out and fan-in depends on the specific system requirements and workload characteristics. Fan-out excels in parallelizing tasks by distributing workloads across multiple services, enhancing scalability and reducing latency in microservices architectures. Fan-in, conversely, consolidates responses from diverse sources, simplifying data aggregation and improving fault tolerance in event-driven systems or data processing pipelines.
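In practice the two patterns are often used together: work fans out to parallel workers, and the partial results fan back in to one aggregator. The sketch below uses Python's `concurrent.futures`, with `word_count` as a hypothetical per-chunk task.

```python
from concurrent.futures import ThreadPoolExecutor

def word_count(chunk: str) -> int:
    """Hypothetical per-chunk task run in parallel."""
    return len(chunk.split())

def fan_out_fan_in(chunks: list[str], workers: int = 4) -> int:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(word_count, chunks))  # fan-out: one task per chunk
    return sum(partials)                               # fan-in: aggregate results

if __name__ == "__main__":
    print(fan_out_fan_in(["the quick brown fox", "jumps over", "the lazy dog"]))  # 9
```

Threads suit I/O-bound tasks such as service calls. For CPU-bound work in CPython, `ProcessPoolExecutor` avoids contention on the global interpreter lock.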

Fan-out vs Fan-in Infographic

libmatt.com
