HRL (Hierarchical Reinforcement Learning) improves learning efficiency by breaking complex tasks into smaller subtasks, while SRD (Subroutine Discovery) focuses on automatically identifying reusable skills or behaviors within an environment. Understanding the differences between HRL and SRD can help you optimize your reinforcement learning models for better performance and adaptability.

Table of Comparison

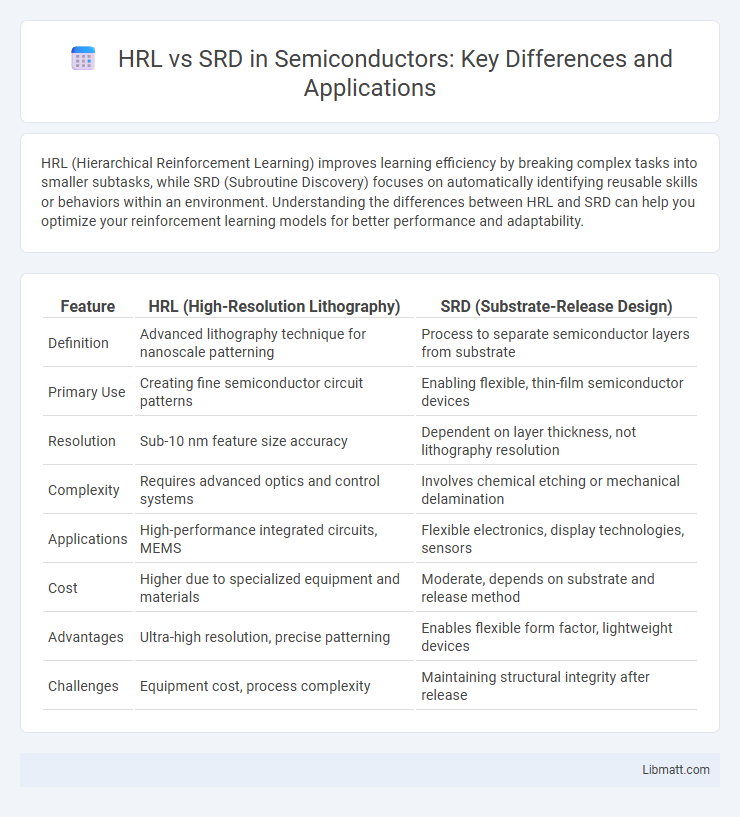

| Feature | HRL (High-Resolution Lithography) | SRD (Substrate-Release Design) |

|---|---|---|

| Definition | Advanced lithography technique for nanoscale patterning | Process to separate semiconductor layers from substrate |

| Primary Use | Creating fine semiconductor circuit patterns | Enabling flexible, thin-film semiconductor devices |

| Resolution | Sub-10 nm feature size accuracy | Dependent on layer thickness, not lithography resolution |

| Complexity | Requires advanced optics and control systems | Involves chemical etching or mechanical delamination |

| Applications | High-performance integrated circuits, MEMS | Flexible electronics, display technologies, sensors |

| Cost | Higher due to specialized equipment and materials | Moderate, depends on substrate and release method |

| Advantages | Ultra-high resolution, precise patterning | Enables flexible form factor, lightweight devices |

| Challenges | Equipment cost, process complexity | Maintaining structural integrity after release |

Understanding HRL and SRD: Definitions and Core Concepts

Hierarchical Reinforcement Learning (HRL) structures complex tasks into simpler subtasks or hierarchies, enabling efficient learning and decision-making. Skill Reward Design (SRD) focuses on optimizing rewards to enhance specific skill acquisition within reinforcement learning frameworks. Your grasp of HRL's decomposition and SRD's reward customization is essential for leveraging their combined potential in advanced AI systems.

Key Differences Between HRL and SRD

Hierarchical Reinforcement Learning (HRL) structures learning tasks into multiple levels of abstraction, enabling complex decision-making by decomposing tasks into sub-tasks, while Stochastic Reward Design (SRD) focuses on optimizing reward functions under uncertainty to guide agent behavior. HRL improves scalability and efficiency in environments with extended temporal dependencies, whereas SRD prioritizes robustness in reward assignment amidst noisy or ambiguous feedback. The key difference lies in HRL's emphasis on hierarchical policy learning versus SRD's approach to probabilistic reward modeling for improved exploration and adaptation.

Advantages and Disadvantages of HRL

Hierarchical Reinforcement Learning (HRL) offers significant advantages by breaking complex tasks into manageable sub-tasks, improving learning efficiency and enabling faster convergence in environments with sparse rewards. HRL allows better policy transferability across related tasks but introduces challenges such as increased algorithmic complexity, difficulty in designing optimal hierarchies, and potential propagation of suboptimal decisions through the hierarchy. Compared to Standard Reinforcement Learning (SRL), HRL excels in scalability but may require extensive domain knowledge to define effective sub-goals and hierarchy structures.

Strengths and Limitations of SRD

SRD excels in structured task execution by mapping specific actions to predefined rules, enhancing consistency and predictability in controlled environments. Its limitation lies in poor adaptability to complex, dynamic scenarios where flexible decision-making and learning are essential, often resulting in rigid, suboptimal outcomes. Compared to HRL, which leverages hierarchical abstraction to manage complexity and improve learning efficiency, SRD lacks the capability to generalize across varied tasks or transfer knowledge effectively.

Common Applications of HRL in Industry

HRL (Hierarchical Reinforcement Learning) finds widespread applications in robotics for complex task automation and autonomous navigation, improving efficiency by decomposing tasks into manageable sub-goals. In natural language processing, HRL enhances dialogue systems by structuring conversations into hierarchical intents, boosting user interaction quality. HRL is also instrumental in supply chain optimization, where multi-level decision-making processes improve logistics, inventory management, and resource allocation.

Typical Uses of SRD Across Sectors

SRD (Software Requirements Document) is extensively used across industries such as finance, healthcare, and manufacturing to ensure clear communication of system specifications and user needs. In finance, SRD guides the development of secure, compliant transaction systems, while in healthcare it helps define requirements for patient data management and regulatory adherence. Manufacturing leverages SRD to detail automation processes and machinery controls, facilitating efficient development and deployment of software solutions.

HRL vs SRD: Performance Comparison

HRL (Hierarchical Reinforcement Learning) demonstrates improved performance in complex, multi-level decision-making tasks by breaking down problems into manageable sub-goals, whereas SRD (Standard Reinforcement Learning) often struggles with scalability and slower convergence. Your applications requiring efficient learning in high-dimensional environments typically benefit more from HRL's structured approach, which accelerates training and enhances policy effectiveness. Empirical studies reveal HRL consistently outperforms SRD in environments like robotics and strategic games, delivering higher rewards and better adaptability.

Decision-Making Factors: Choosing HRL or SRD

Choosing between Hierarchical Reinforcement Learning (HRL) and State-Representation Design (SRD) depends heavily on the complexity of the task and the level of abstraction required. HRL is ideal for problems with nested or multi-level decision processes, enabling efficient learning by breaking tasks into subtasks. Your decision should consider whether modularity and scalability are paramount, as HRL supports these through hierarchical policies, whereas SRD emphasizes designing informative state spaces for more straightforward, flat decision-making models.

Case Studies Highlighting HRL and SRD in Practice

Case studies showcasing HRL (Hierarchical Reinforcement Learning) demonstrate its effectiveness in complex tasks like robotic manipulation and autonomous driving by breaking down goals into manageable subtasks. SRD (State-Reward Decomposition) is illustrated in applications such as personalized recommendation systems, where decomposing state and reward structures enhances learning efficiency and adaptability. Your understanding of these methodologies deepens by analyzing practical implementations revealing their unique strengths in optimizing decision-making processes.

Future Trends: The Evolution of HRL and SRD

Future trends in HRL (Hierarchical Reinforcement Learning) and SRD (Semantic Role Disambiguation) showcase rapid advancements driven by increased computational power and novel algorithms. HRL is evolving towards more scalable and interpretable frameworks that structure complex decision-making tasks into manageable sub-goals, improving efficiency in real-world applications. Your ability to leverage SRD advancements enhances natural language processing by precisely identifying semantic roles, enabling deeper contextual understanding and more accurate AI-driven communication.

HRL vs SRD Infographic

libmatt.com

libmatt.com